Nils T Siebel

Autonomous Robot Systems

This page gives an overview of my projects related to the visual control of autonomous robot systems.

1 Introduction

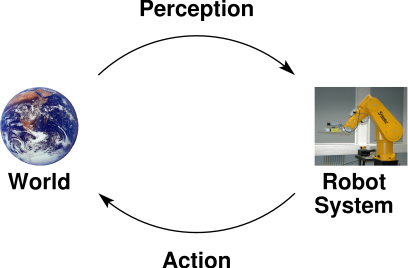

Perception-Action and Learning

Robot systems that are to operate in an unknown or changing environment need a

means to perceive the environment and how their actions change it. This

interaction can be modelled by a Perception-Action Cycle, or for

short: PAC. Our research is focused on ways to implement these PACs

to develop "intelligent" robot systems. Our methods combine approaches from

engineering (feedback control), mathematics (control theory and optimisation) and

computer science/biology (neural networks and evolutionary methods).

2 Project 1 - Visual Servoing in 6 DOF using a Trust-Region Method

In this project an

Image-based Visual Servoing robot controller was

designed, implemented and validated.

In order to be able to manipulate an object the controller's task is to

move the robot end-effector into a desired pose relative to the object.

The location of the object in robot space is unknown.

Only image data from a CCD camera attached to the robot's end-effector

(eye in hand) is used to calculate robot movements as controller

output.

Image features corresponding to the desired pose were determined by moving

the robot there and acquiring an image (teaching by showing).

The object carries a label with 4 markings.

The difference between their actual and desired positions in the image

(image error) is the only controller input.
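As a minimal sketch of the image error described above, the following Python snippet stacks the differences between actual and desired marking positions into one vector. The function names and the pixel coordinates are hypothetical and chosen for illustration only; the text does not state how the controller represents its features.

```python
def image_error(actual, desired):
    """Stack the per-marking differences (actual - desired) into one flat list.

    Each argument is a list of (u, v) image coordinates, one pair per marking.
    """
    if len(actual) != len(desired):
        raise ValueError("need the same number of actual and desired markings")
    error = []
    for (u, v), (u_d, v_d) in zip(actual, desired):
        error.extend([u - u_d, v - v_d])
    return error


def error_norm(error):
    """Euclidean norm of the image error vector."""
    return sum(e * e for e in error) ** 0.5


# Hypothetical pixel coordinates of the 4 markings (assumed values).
desired = [(100.0, 100.0), (200.0, 100.0), (200.0, 200.0), (100.0, 200.0)]
actual = [(103.0, 104.0), (203.0, 104.0), (203.0, 204.0), (103.0, 204.0)]

e = image_error(actual, desired)
print(len(e), error_norm(e))  # 4 markings give an 8-dimensional error vector
```

With 4 markings the error vector has 8 components, which is enough to constrain the 6 degrees of freedom of the end-effector pose.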

The implemented algorithm is of the type image-based static look and move.

Because of their robustness towards model errors, applications for

image-based robot controllers include autonomous robot systems.

An example is the University of Bremen's FRIEND system to

support disabled people, to which a version of this controller has been ported.

A serious problem of previously implemented controllers is the handling

of errors in the system model, particularly external camera parameters.

Especially with an eye in hand camera, the resulting large, inaccurate robot

movements can produce undesired effects.

Apart from the danger of hitting objects or people inside the robot

workspace, object markings are often no longer visible in the image and the

controller does not converge.

Using a Trust Region Method to determine its output, the newly

developed visual servoing controller prevents these problems.

This is done by measuring model errors and automatically adapting a

maximum step length for the controller.

By taking steps that are as long as possible while maintaining convergence, the new

controller guarantees a successful visual servoing process.

At the same time the number of steps required to move the robot into the

desired pose is kept very small.
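The step-length adaptation described above can be illustrated with a one-dimensional toy example. This is a sketch under stated assumptions, not the controller itself: the thresholds 0.25 and 0.75, the shrink and grow factors, and all function names are textbook trust-region conventions chosen for illustration. The "robot" is a single scalar pose `x` whose image error is given by a hypothetical `feature` function, and a step that increases the error is simply undone in simulation.

```python
def clip_step(step, radius):
    """Limit a scalar step to the trust-region radius (maximum step length)."""
    return max(-radius, min(radius, step))


def update_radius(radius, rho, on_boundary, max_radius=4.0):
    """Adapt the maximum step length from the measured model quality rho.

    rho = actual error reduction / reduction predicted by the model.
    """
    if rho < 0.25:                      # model was poor: trust it less
        return 0.25 * radius
    if rho > 0.75 and on_boundary:      # model was good and the step was limited
        return min(2.0 * radius, max_radius)
    return radius


def servo(x, feature, model_jacobian, radius=4.0, tol=1e-6, max_steps=50):
    """Toy look-and-move loop with a trust-region step limit (1-D sketch)."""
    history = [x]
    for _ in range(max_steps):
        e = feature(x)                              # "look": measure image error
        if abs(e) < tol:
            break
        full_step = -e / model_jacobian             # step suggested by the model
        s = clip_step(full_step, radius)
        predicted = e**2 - (e + model_jacobian * s)**2   # positive while e != 0
        e_new = feature(x + s)                      # "move", then measure again
        actual = e**2 - e_new**2
        rho = actual / predicted
        radius = update_radius(radius, rho, abs(s) >= radius)
        if rho > 0.0:                               # keep only improving steps
            x = x + s
            history.append(x)
    return x, history


# Assumed toy system: the true feature is f(x) = x, but the model Jacobian is
# wrong by a factor of 2, so unrestricted steps overshoot from x to -x forever.
final_x, history = servo(1.5, feature=lambda x: x, model_jacobian=0.5)
print(final_x, history)
```

Without the step limit, the wrong Jacobian in this toy system makes every full step overshoot to the mirror position, so the error never decreases. With the adapted radius the loop shortens its steps after each overshoot and lengthens them again once the model predicts well.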

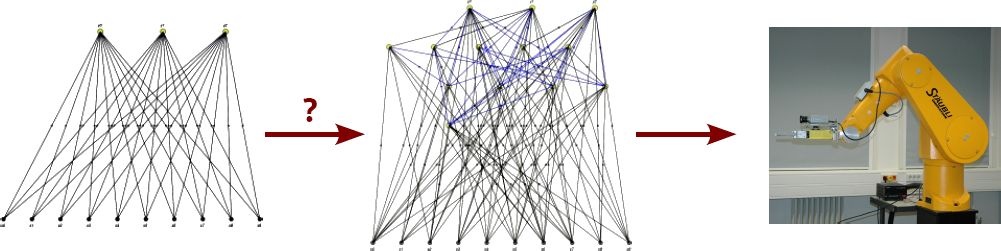

3 Project 2 - Evolutionary Learning of Neural Networks for PAC Control

Yohannes Kassahun introduced the method "EANT" ("Evolutionary Acquisition of Neural Topologies")

in his PhD work in our research group between 2003 and 2006. Since then it has been

further developed and applied to learning neural controllers for visual servoing.

4 Project 3 - The EC Project COSPAL

The European Framework 6 Project COSPAL (July 2004 to June 2007) is concerned with the

development of a design for systems that combine perception and action

capabilities to solve complex planning and manipulation tasks. Methods using

supervised, unsupervised and reinforcement learning are employed. An important

focus is also on incremental learning such that the system can adapt to new

situations and tasks during its lifetime. The learning methods are based

on Artificial Neural Networks (e.g. DCS Networks with online learning

capabilities), Associative Networks and others.

To develop and test the new methods we are

implementing a demonstrator system that solves a shape-sorting puzzle like the

ones used as toys. The system has several layers of abstraction, with the

highest being a symbolic processing unit. At each layer a Perception-Action

Cycle (PAC) can be identified, building a hierarchy of PACs. While traditional

methods (or a 3-year-old child) could be used to solve the shape-sorting task,

it is our goal to use only methods that learn how these PACs are implemented.

The task of our group, the Cognitive Systems Group of the

Christian-Albrechts-University of Kiel, is to implement learning methods for those parts of the system

closest to the robot system hardware. The perception/action modules and

controls learned by our group are those for detecting objects and extracting

features from images, classifying these features and moving the robot (long

range movement, alignment, obstacle avoidance). An emphasis is also placed on

learning to imitate human-like movement while solving the task.

Author of these pages:

Nils T Siebel.

Last modified on Wed May 26 2010.

This page and all files in these subdirectories are Copyright © 2004-2010 Nils T Siebel, Berlin, Germany.