Nils T Siebel

Evolutionary Reinforcement Learning

This page gives an overview of EANT, a method for learning neural networks by evolutionary reinforcement learning.

Evolutionary Acquisition of Neural Topologies, Version 2 (EANT2)

1 Motivation

When a neural network is to be developed for a given problem, two aspects need to be considered:

- What should the structure (or topology) of the network be? More precisely, how many neural nodes does the network need in order to fulfil the demands of the given task, and what connections should be made between these nodes?

- Given the structure of the neural network, what are the optimal values for its parameters? This includes the weights of the connections and possibly other parameters.

Traditionally, the solution to the first aspect, the network's structure, is found by trial and error or determined beforehand using "intuition". Finding the solution to the second aspect, the parameters, is therefore usually the only one considered in the literature. It requires optimisation in a parameter space that can have a very high dimensionality: for difficult tasks it can have up to several hundred dimensions. This so-called "curse of dimensionality" is a significant obstacle in machine learning problems. When a network's parameters are trained by examples (e.g. supervised learning), it means that the number of training examples needed increases exponentially with the dimension of the parameter space. When other methods of determining the parameters are used (e.g. reinforcement learning, as is done here), the effects are different but equally detrimental. Most approaches for determining the parameters use backpropagation or similar methods that are, in effect, simple stochastic gradient descent optimisation algorithms.
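
The exponential growth mentioned above can be made concrete with a rough illustration. It assumes, purely for the sake of the example, that covering each parameter axis takes a fixed number of sample values, so that a full grid needs that number raised to the power of the dimension. The function name `grid_points` and the choice of 10 samples per axis are illustrative assumptions, not part of EANT.

```python
def grid_points(samples_per_axis: int, dimension: int) -> int:
    """Number of points in a full grid with the given resolution per axis."""
    return samples_per_axis ** dimension


# Assumed resolution of 10 samples per axis; the count grows as 10**d.
for d in (1, 2, 5, 10, 100):
    print(f"dimension {d:3d}: {grid_points(10, d)} grid points")
```

Even at this coarse resolution, a parameter space with a few hundred dimensions cannot be sampled exhaustively.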

2 Our Approach: EANT

In our opinion, the traditional approach described above has the following deficiencies:

- The common approach of pre-designing the network structure is difficult or even infeasible for complicated tasks. It can also result in overly complex networks if the designer cannot find a small structure that solves the task.

- Determining the network parameters by local optimisation algorithms such as gradient descent-type methods is impracticable for large problems. It is known from mathematical optimisation theory that these algorithms tend to get stuck in local minima. They only work well with very simple (e.g. convex) target functions or if an approximate solution is known beforehand.
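
The local-minimum problem named in the second point can be seen on a one-dimensional toy function. The sketch below is only an illustration: the function `f`, the learning rate and the two starting points are assumptions chosen for this example and have nothing to do with an actual network error surface. `f` has two minima, one near x = 1.13 and a lower one near x = -1.30, and plain gradient descent ends in whichever lies downhill from its starting point.

```python
def f(x):
    """Non-convex toy target function with two minima (illustrative only)."""
    return x**4 - 3 * x**2 + x


def df(x):
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x, learning_rate=0.01, steps=1000):
    """Plain gradient descent: repeatedly step against the gradient."""
    for _ in range(steps):
        x -= learning_rate * df(x)
    return x


right = gradient_descent(2.0)   # starts on the right-hand slope
left = gradient_descent(-2.0)   # starts on the left-hand slope
print(f"start  2.0 -> x = {right:.4f}, f(x) = {f(right):.4f}")
print(f"start -2.0 -> x = {left:.4f}, f(x) = {f(left):.4f}")
```

The run started at 2.0 settles in the shallower minimum and never reaches the better one, which is the behaviour described above: the result depends on having a good starting point.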

In short, these methods lack generality and can therefore only be used to design neural networks for a small class of tasks. They are engineering-type approaches. There is nothing wrong with that if one needs to solve only a single, more or less constant problem: the No Free Lunch Theorem states that, averaged over all possible problems, no optimisation method performs better than any other, so a solution specifically designed for a particular task can outperform more general methods at this task only at the price of performing worse on other tasks, or if the task changes. That lack of generality, however, makes these methods unsatisfactory from a scientific point of view.

We believe that the best way to overcome these deficiencies is to replace these approaches with more general ones that are inspired by biology. Evolutionary theory tells us that the structure of the brain has developed over a long period of time, starting from simple structures and becoming more complex over time. The connections between biological neurons, by contrast, are modified by experience, i.e. learned and refined over a much shorter time span.

We have developed a method called EANT, Evolutionary Acquisition of Neural Topologies, that works in very much the same way to create a neural network as a solution to a given task. It is a very general learning algorithm that does not use any pre-defined knowledge of the task or the required solution. Instead, EANT uses evolutionary search methods on two levels:

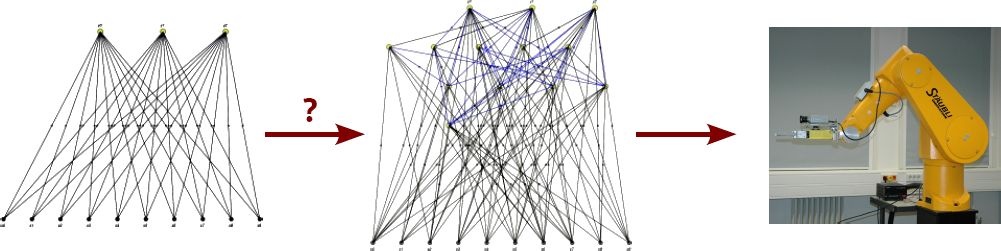

- In an outer optimisation loop called structural exploration, new neural structures are developed by gradually adding new structure to an initially minimal network that is used as a starting point.

- In an inner optimisation loop called structural exploitation, the parameters of all currently considered structures are adjusted to maximise the performance of the networks on the given task.
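
The interplay of the two loops can be sketched in a few lines of Python. This is a minimal toy under stated assumptions, not the EANT implementation: the XOR task, the network layout (a linear output unit plus optional tanh hidden units), the simple (1+1) evolution strategy used for the inner loop, and all function names (`exploit`, `explore`, `evolve`) are hypothetical choices made for this illustration.

```python
import math
import random

# Toy task (an assumption for this sketch): learn XOR.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def n_params(hidden):
    # Output unit: 2 input weights + bias. Each hidden unit: 2 input
    # weights + bias + 1 weight to the output.
    return 3 + 4 * hidden


def forward(hidden, w, x):
    out = w[0] * x[0] + w[1] * x[1] + w[2]
    for h in range(hidden):
        a, b, c, v = w[3 + 4 * h: 7 + 4 * h]
        out += v * math.tanh(a * x[0] + b * x[1] + c)
    return out


def fitness(hidden, w):
    # Negative sum of squared errors: higher is better.
    return -sum((forward(hidden, w, x) - y) ** 2 for x, y in XOR)


def exploit(hidden, w, rng, steps=200, sigma=0.3):
    """Inner loop: tune the parameters of a fixed structure ((1+1)-ES)."""
    best, best_fit = list(w), fitness(hidden, w)
    for _ in range(steps):
        cand = [p + rng.gauss(0.0, sigma) for p in best]
        cand_fit = fitness(hidden, cand)
        if cand_fit >= best_fit:
            best, best_fit = cand, cand_fit
    return best, best_fit


def explore(hidden, w):
    """Outer-loop mutation: add one hidden unit. Its weights start at
    zero, so the grown network initially behaves like its parent."""
    return hidden + 1, w + [0.0, 0.0, 0.0, 0.0]


def evolve(generations=5, population=4, seed=0):
    rng = random.Random(seed)
    # Start from a minimal network: no hidden units, all parameters zero.
    pop = [(0, [0.0] * n_params(0))]
    history = []
    for _ in range(generations):
        # Structural exploitation: optimise every current structure.
        scored = []
        for hidden, w in pop:
            w, fit = exploit(hidden, w, rng)
            scored.append((fit, hidden, w))
        scored.sort(key=lambda t: t[0], reverse=True)
        history.append(scored[0][0])
        # Structural exploration: keep the best, grow new structures.
        survivors = scored[: max(1, population // 2)]
        pop = [(h, w) for _, h, w in survivors]
        pop += [explore(h, w) for _, h, w in survivors]
    return scored[0], history


(best_fit, best_hidden, best_w), history = evolve()
print("best fitness per generation:", [round(v, 4) for v in history])
print("hidden units in best network:", best_hidden)
```

Because the survivors are carried over unchanged and the inner loop only accepts candidates that are at least as good, the best fitness in this sketch never decreases from one generation to the next. New structure is only kept if it eventually pays off after its parameters have been tuned.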

3 Applying EANT to Visual Servoing

EANT, originally developed by Yohannes Kassahun in the Cognitive Systems Group in Kiel during the years 2003-2006, has since been improved by replacing the structural exploitation loop with one that uses CMA-ES (Covariance Matrix Adaptation Evolution Strategy) as its optimisation method, and by changing the way structural changes are made.

It is now in a state where it can be, and has been, applied to very complex learning problems. It is being further developed under the lead of Nils T Siebel in the same research group.

The method has been tested with a simulation of a visual servoing setup. A robot arm with an attached hand is to be controlled by the neural network to move to a position where an object can be picked up. The only sensory data available to the network is visual data from a camera that overlooks the scene. EANT was used with a complete simulation of this visual servoing scenario to learn networks by evolutionary reinforcement learning.

The results obtained with EANT were very good, with an EANT controller of moderate size easily outperforming both traditional visual servoing and other neural network learning approaches, even though the traditional controller was given the true distance to the object in its Image Jacobian.

Author of these pages: Nils T Siebel.

Last modified on Wed May 26 2010.

This page and all files in these subdirectories are Copyright © 2004-2010 Nils T Siebel, Berlin, Germany.